
Applications: Similar systems are used on automated conveyor lines to discard misshapen parts, to sort unripe, ripe and rotten fruit, and to separate fertilised eggs from unfertilised ones at an egg factory. More sophisticated systems of this kind can do facial recognition, image stabilisation on live video, market analysis and so on.

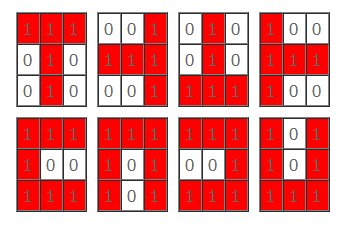

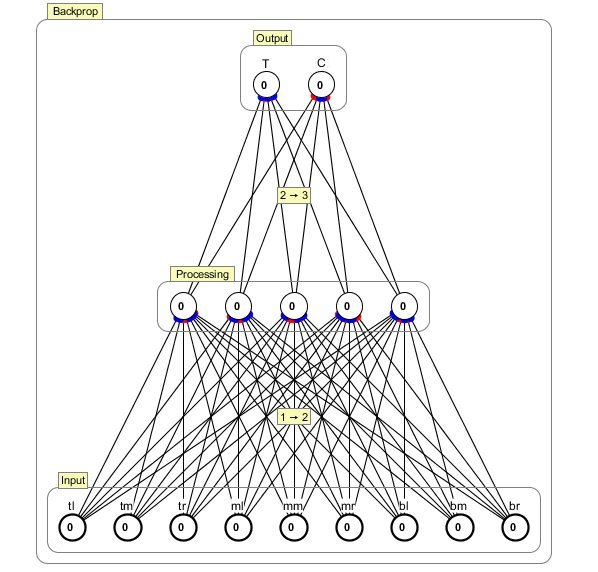

Let's imagine a scenario where we need a neural network that can recognise 2 distinct patterns - let's use the letters "T" and "C", regardless of their orientation (i.e. upside down, sideways etc.). We humans can do this effortlessly, but we need to engineer a system that LEARNS what these patterns look like before we can automate it.

Limitations: To make this doable in a short period of time, we will GREATLY reduce the resolution of the input scanner to 3x3 (a total of 9 sensors). This idea is scalable and we will look at data acquisition at larger resolutions later.

Some definitions:

Our scanner grid for INPUT will be of the form

| | | |
|---|---|---|
| top left (tl) | top middle (tm) | top right (tr) |
| middle left (ml) | middle middle (mm) | middle right (mr) |
| bottom left (bl) | bottom middle (bm) | bottom right (br) |

Imagine a tiny scanner that can tell the state of each of its pixels, to detect the pattern it is pointed at.

We will denote an "active" pixel as a "1" and an inactive pixel as a "0"

...so a "regular T" could be encoded as 1,1,1,0,1,0,0,1,0

and a "regular C" could be encoded as 1,1,1,1,0,0,1,1,1

...and so on.
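As a rough sketch of this encoding in Python (the helper names `show` and `active_sensors` are purely illustrative and have nothing to do with Simbrain), each pattern is just a list of nine 0/1 values read left to right, top to bottom:

```python
# Sensor names in scan order: left to right, top to bottom.
SENSORS = ["tl", "tm", "tr", "ml", "mm", "mr", "bl", "bm", "br"]

# The two "regular" patterns from the text, as flat lists of 9 values.
REGULAR_T = [1, 1, 1, 0, 1, 0, 0, 1, 0]
REGULAR_C = [1, 1, 1, 1, 0, 0, 1, 1, 1]

def show(pattern):
    """Render a flat 9-value pattern as three rows of '#' (1) and '.' (0)."""
    rows = [pattern[i:i + 3] for i in range(0, 9, 3)]
    return "\n".join("".join("#" if v else "." for v in row) for row in rows)

def active_sensors(pattern):
    """Return the names of the sensors that are switched on."""
    return [name for name, v in zip(SENSORS, pattern) if v == 1]

print(show(REGULAR_T))
print(active_sensors(REGULAR_T))
print(show(REGULAR_C))
```

Printing the grids this way is a quick check that a row of 1s and 0s really is the letter you meant before you type it into a training table.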

Let's get started:

Download, unzip then run Simbrain (you will need Java also)

The main screen is pretty plain and hints at little of the underlying power of this program.

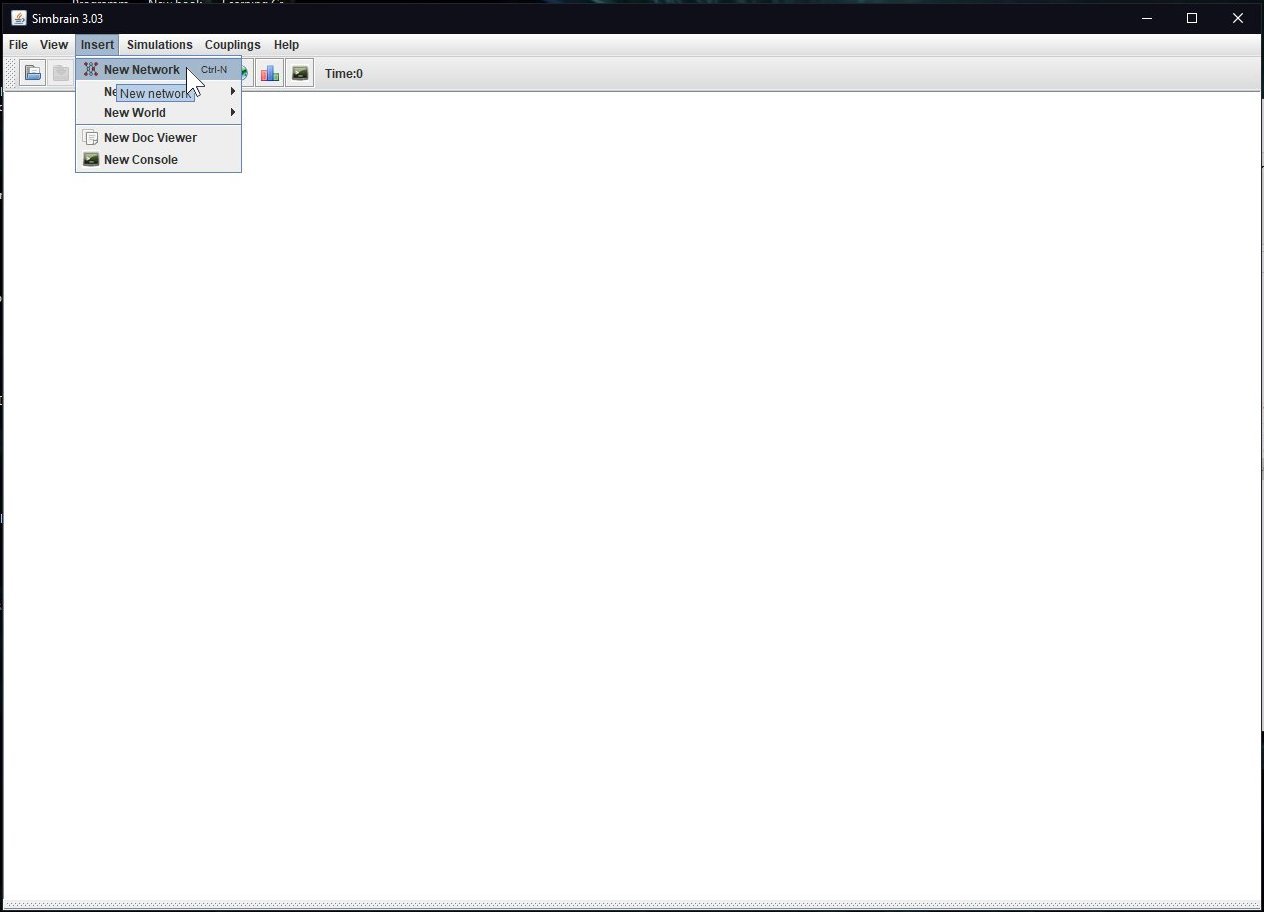

INSERT a NEW NETWORK into your workspace

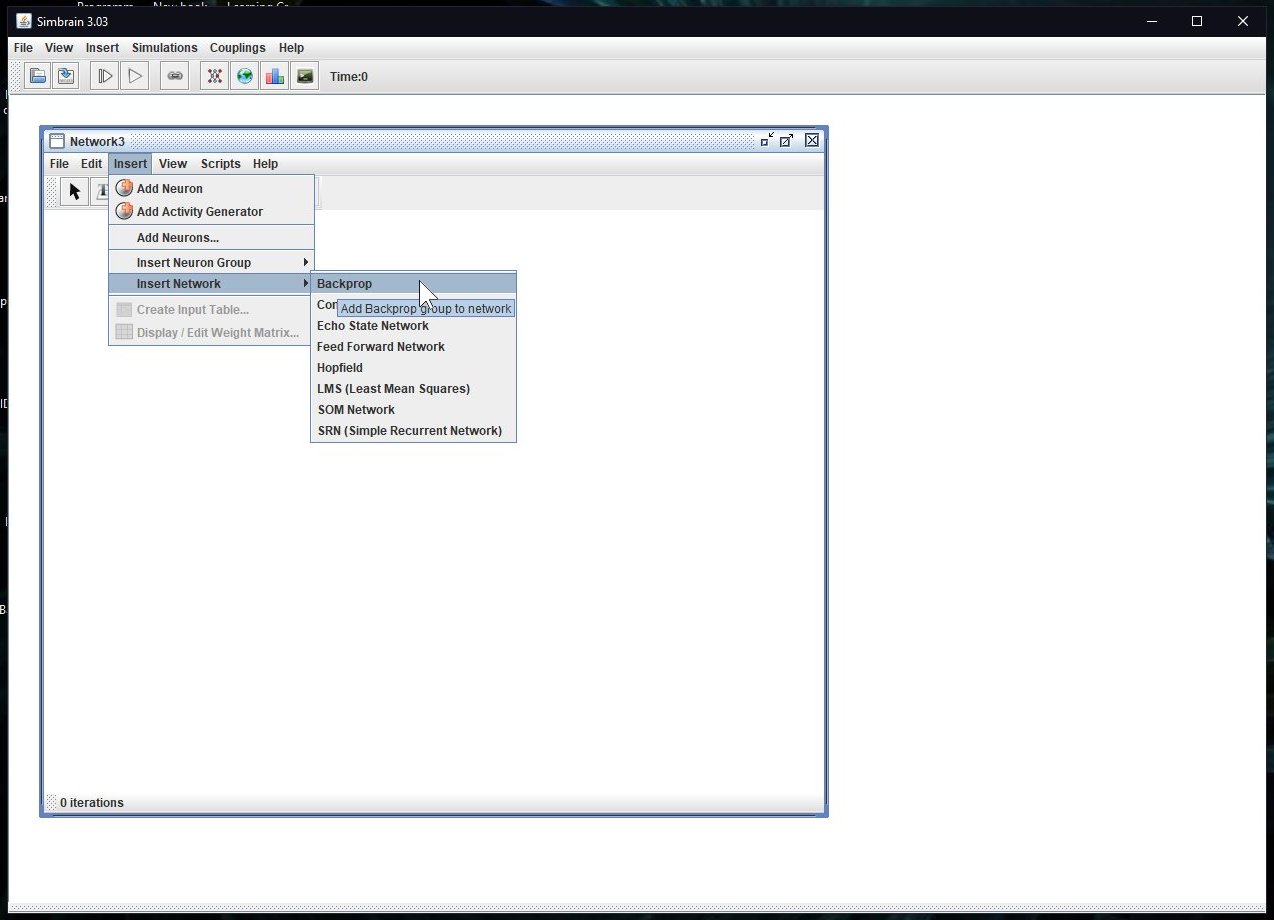

In the resultant window, INSERT -> INSERT NETWORK -> BACKPROP

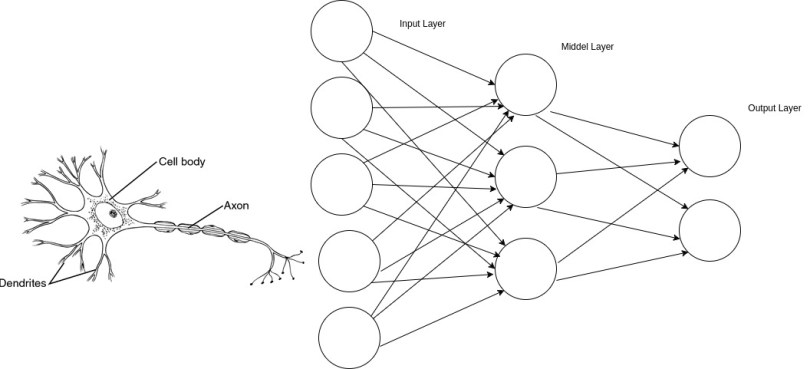

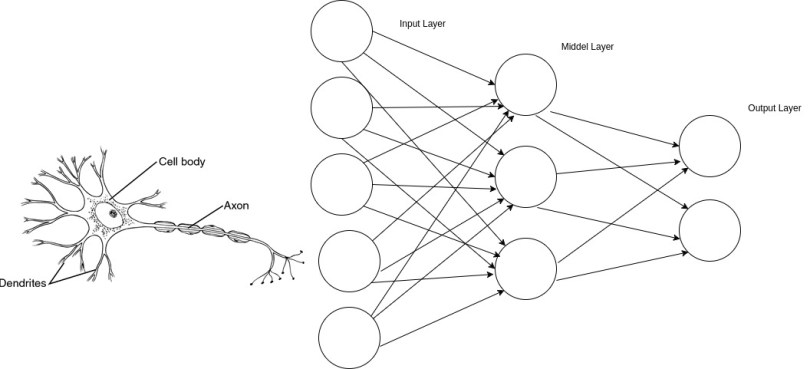

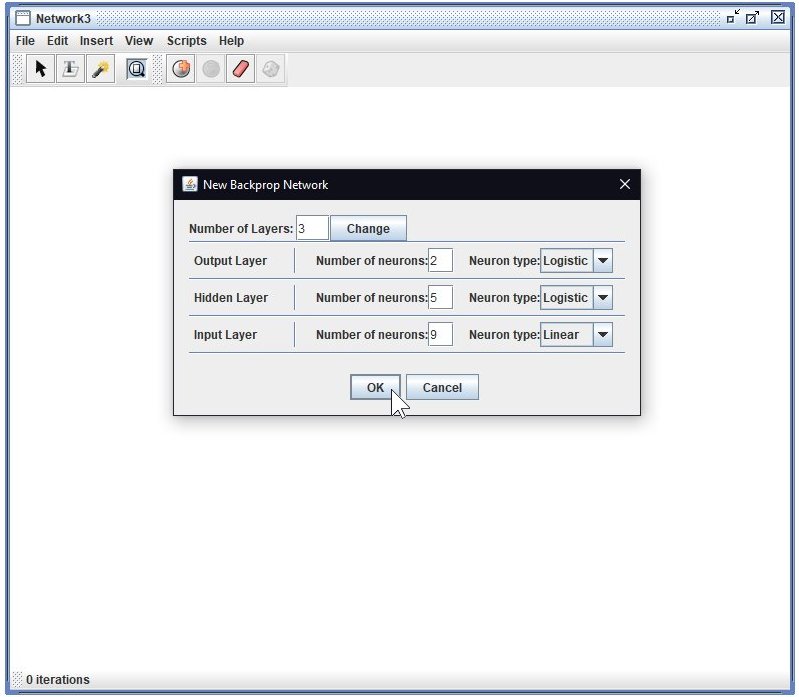

A "backprop" is one flavour of artificial neural network. Briefly, its method of learning involves sending errors back through the network (back-propagating) to adjust the pathways that contributed to the error, making the network less incorrect. Anyway, you will be asked to specify the TOPOLOGY of the backprop.

You need to nominate how many INPUT neurons there will be, how many OUTPUT neurons, and what the hidden layer structure will be. There are hugely complicated mathematical methods for working out the optimum number of middle layers and neurons in those layers, but let's ignore that - our TC network task is not that complex, and we will need remarkably few neurons to learn it.

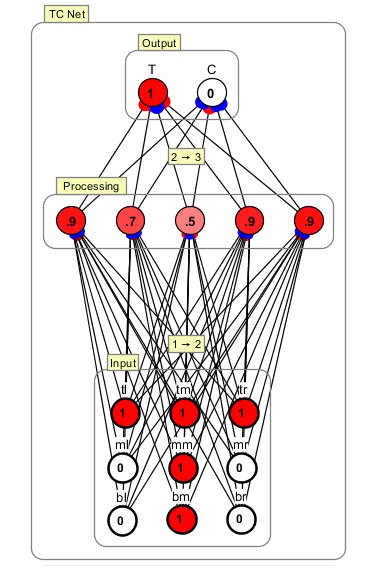

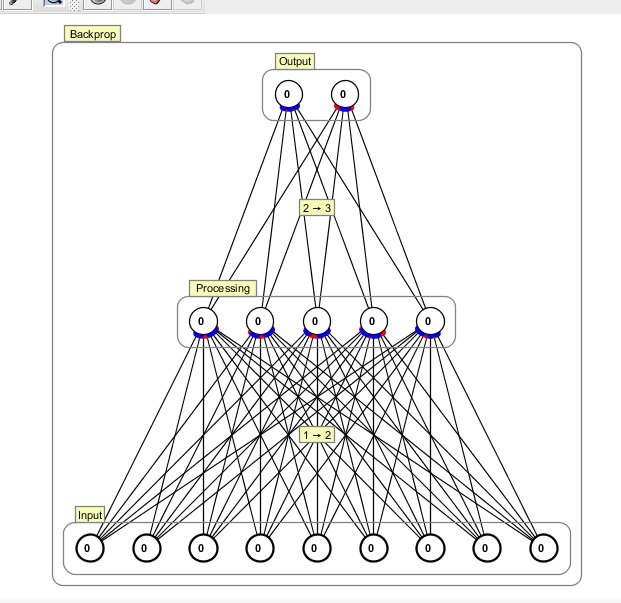

Our TC network needs a 9 pixel input scanner and 2 outputs (the network will tell us either a "T" or a "C"), and Simbrain will suggest 5 "Hidden" (or processing) layer neurons - we shall go with that. You can see here, however, that you could sculpt a huge, complex, multi-layer network to solve a more complex problem - we will, later.

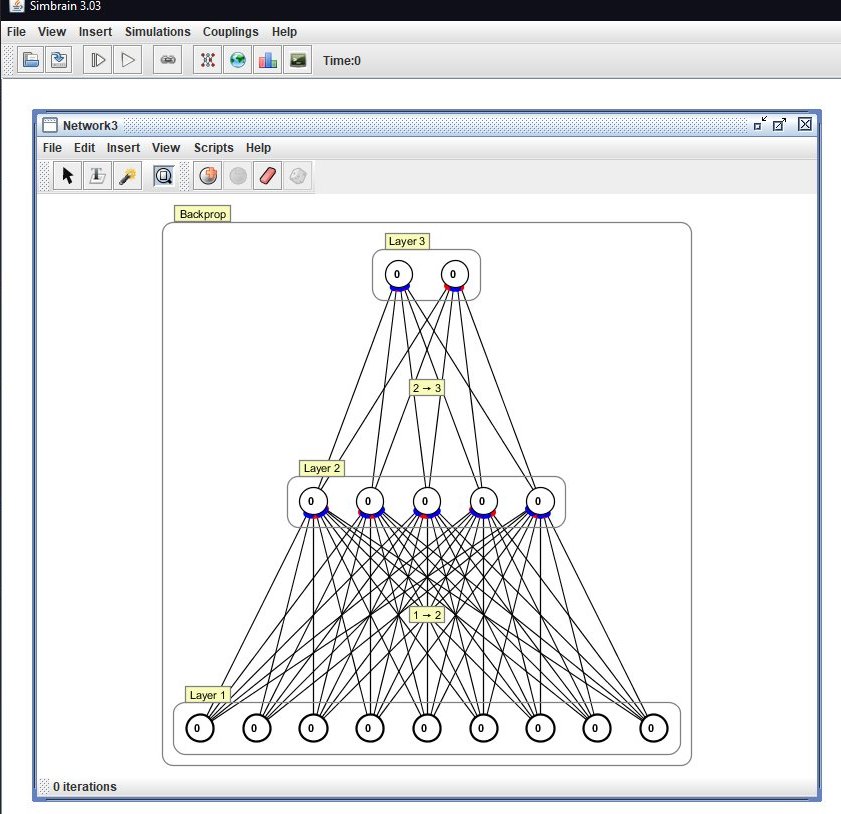

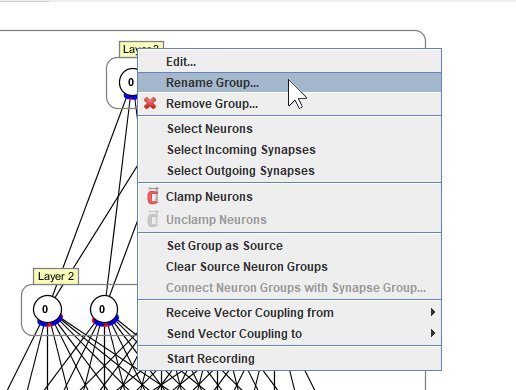

One characteristic of a basic backprop is that every neuron in one layer is connected to every neuron in the next layer, and so on. We then go about "humanizing" the network by naming bits. R-CLICK on the LAYER label and choose "Rename Group".

Label the OUTPUT, PROCESSING and INPUT layers like so:

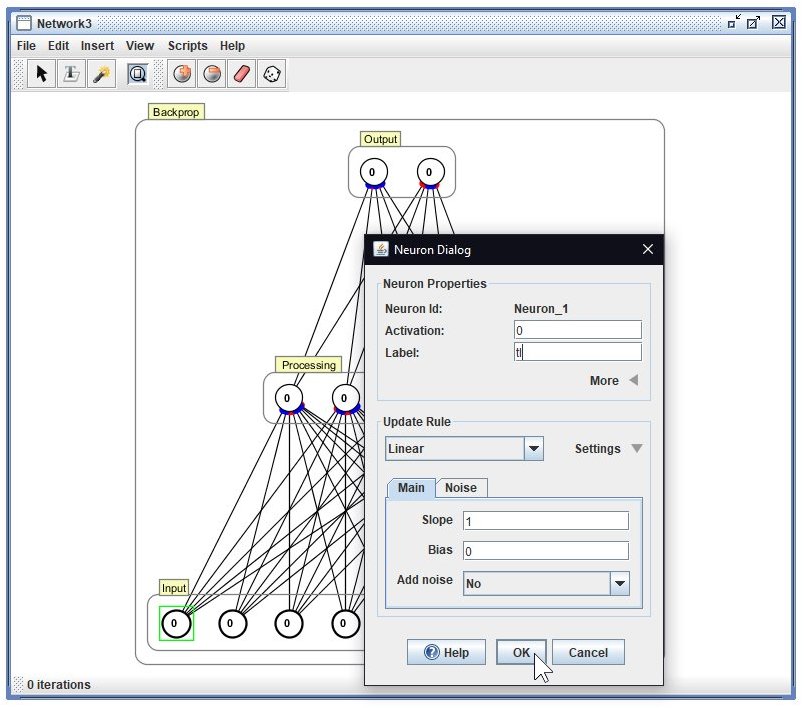

To make the neurons easier to refer to, we can rename them by DOUBLE-CLICKING on each circle in the INPUT layer. Call them tl, tm, tr, ml, mm, mr, bl, bm, br.

Name the 2 neurons on the OUTPUT layer "T" and "C", like so ...

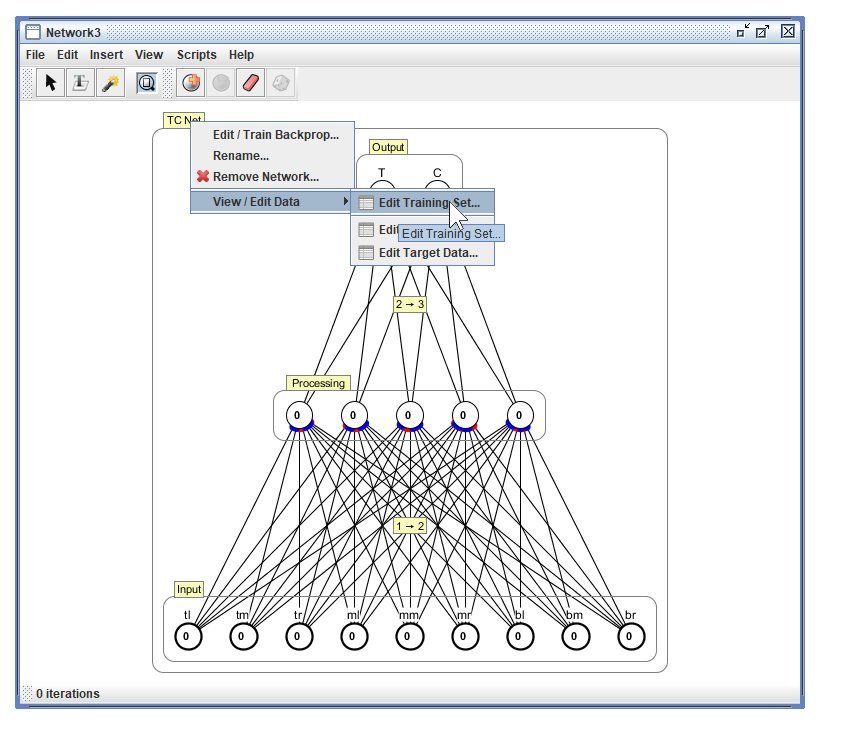

Now that we have the network ready, we have to add TRAINING RULES for the network. R-CLICK on the NETWORK LABEL and choose View/Edit Data -> Edit Training Set

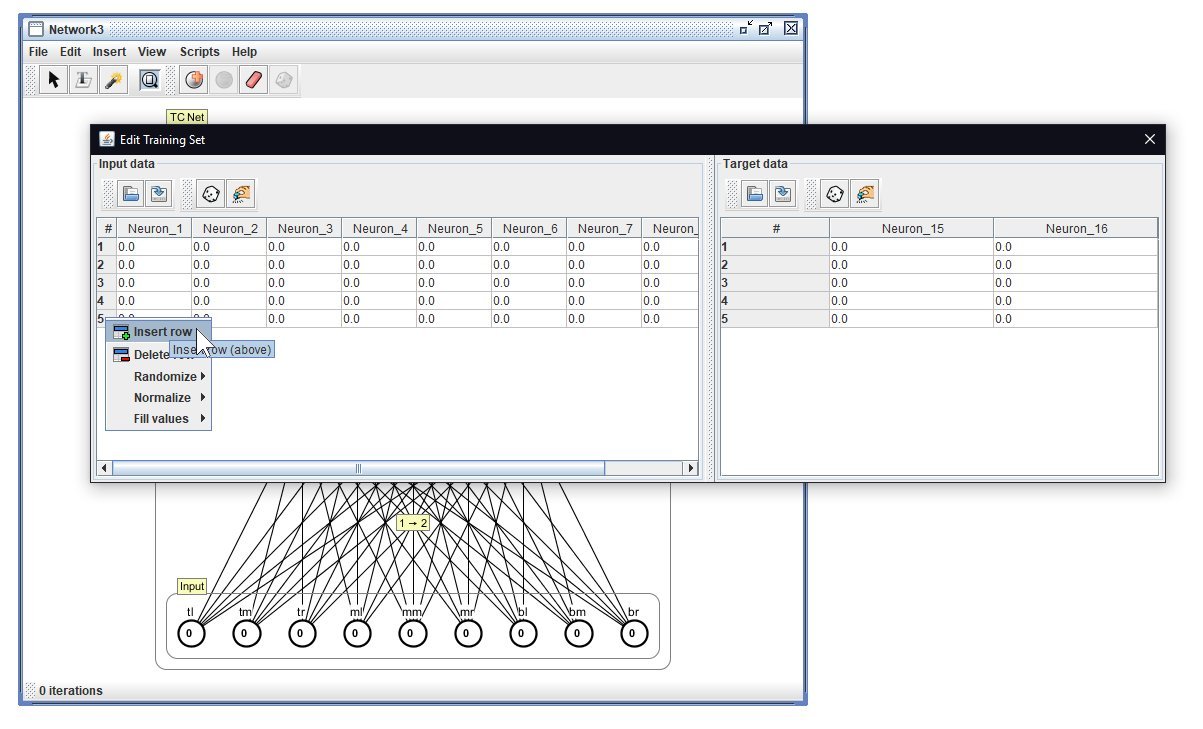

Simbrain will suggest we have 5 training rules (or 5 lines of data in the INPUT and TARGET tables) - we need MORE. R-CLICK on the line marker for "5" and INSERT ROW - do this until we have 8 rows of data in BOTH the INPUT and TARGET data windows. Row 1 represents the inputs and outputs for the first letter - we have 8 rules to learn (2 letters, 4 orientations each).

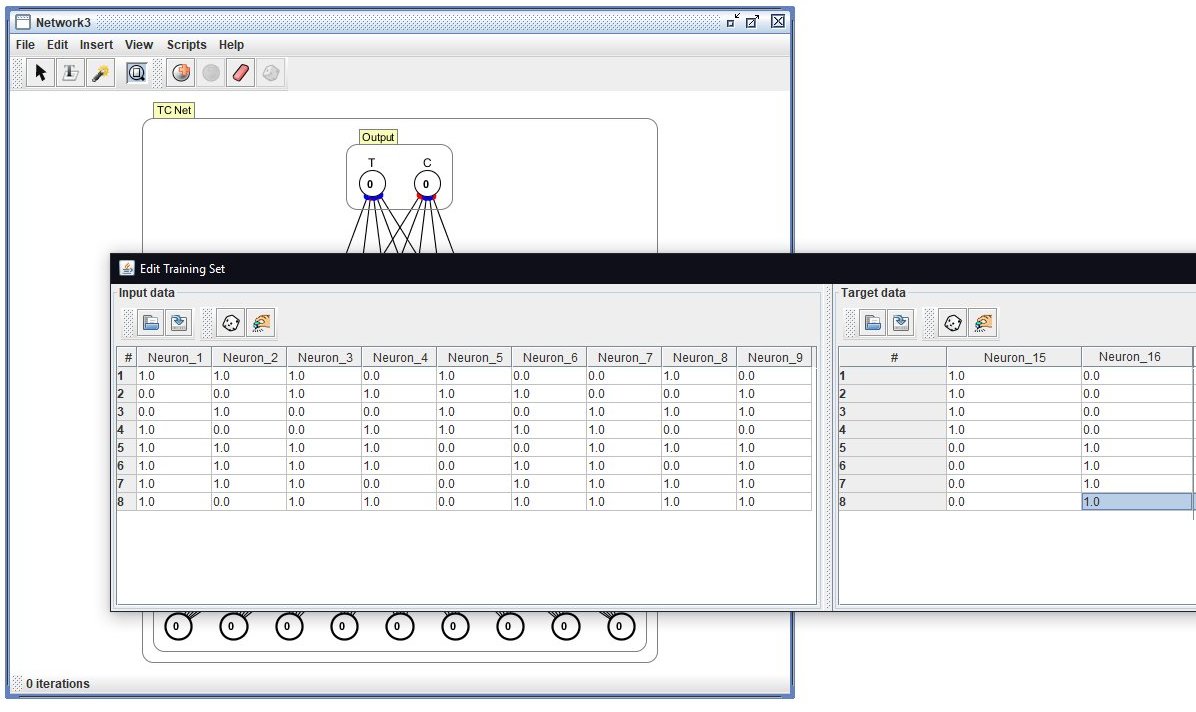

Insert data as shown below. Alternatively, IMPORT the INPUT CSV and the OUTPUT CSV (download these first - it is handy to have copies of your data in case something goes wrong)
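If you would rather generate the 8 rows than type them, here is a minimal Python sketch. Several things in it are assumptions rather than facts about the files above: the file names `tc_inputs.csv` and `tc_targets.csv` are made up, the row order (4 Ts then 4 Cs) may differ from the screenshots, the target encoding "1,0 means T and 0,1 means C" is one reasonable choice, and a plain comma-separated file with no header row is assumed to be what the import expects.

```python
import csv

REGULAR_T = [1, 1, 1, 0, 1, 0, 0, 1, 0]
REGULAR_C = [1, 1, 1, 1, 0, 0, 1, 1, 1]

def rotate(p):
    """Rotate a flat 3x3 pattern 90 degrees clockwise."""
    return [p[3 * (2 - c) + r] for r in range(3) for c in range(3)]

def orientations(p):
    """Return the pattern in all four orientations."""
    result = [p]
    for _ in range(3):
        result.append(rotate(result[-1]))
    return result

# 2 letters x 4 orientations = 8 input rows.
inputs = orientations(REGULAR_T) + orientations(REGULAR_C)

# Assumed target encoding: first column is the "T" neuron, second is "C".
targets = [[1, 0]] * 4 + [[0, 1]] * 4

# Hypothetical file names; use whatever names you plan to import.
with open("tc_inputs.csv", "w", newline="") as f:
    csv.writer(f).writerows(inputs)
with open("tc_targets.csv", "w", newline="") as f:
    csv.writer(f).writerows(targets)
```

Keeping the inputs and targets in the same row order matters: row 3 of the target file must be the answer for row 3 of the input file.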

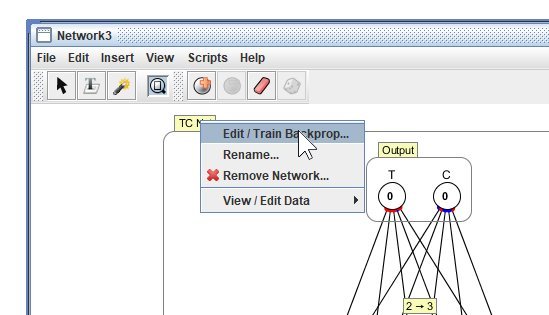

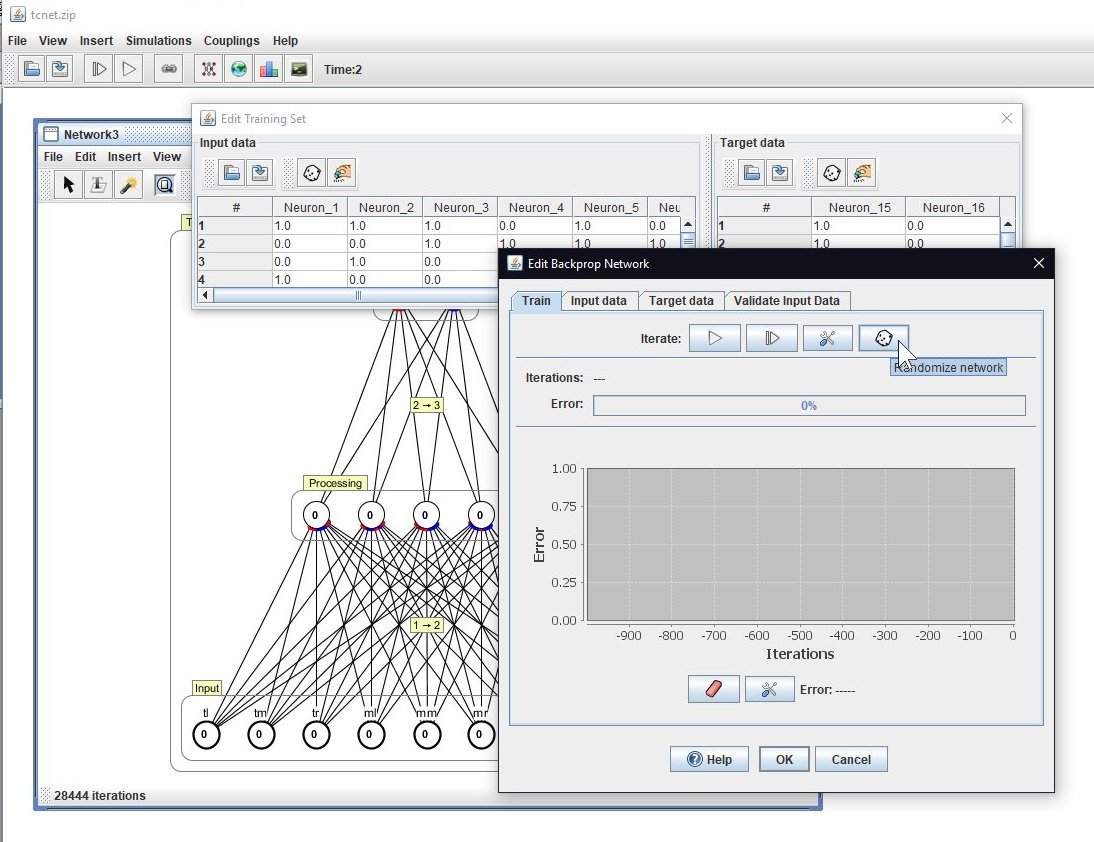

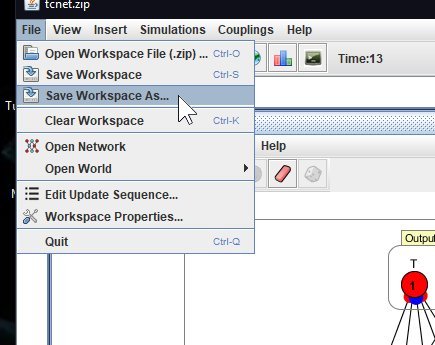

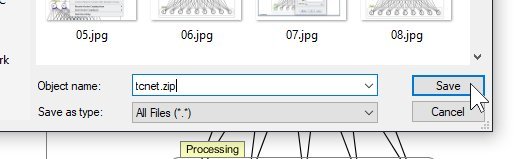

SAVE your WORKSPACE and then you are ready to let your baby learn. R-CLICK on the NETWORK LABEL and choose Edit/Train Backprop...

SOME THEORY: The CONNECTIONS between neurons, together with each neuron's activation level, do the SIGNAL PROCESSING when a neural network runs. A CONNECTION can be either "GET EXCITED = RED" or "CALM DOWN = BLUE", and each has a numeric value (in this case from -1 to +1). We start a neural network's learning process by RANDOMIZING the network connections, so no two kids in the room will have the same network after this. That is great: remember, we ALL have different brains and are all capable of learning the same things, but we will ALL use different neurons to do it. Analogue computing is messy and does not follow any easy-to-explain patterns, but it works just fine. PRESS RANDOMIZE to mix up your network's brain BEFORE you attempt to train it.
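To make the idea of randomized connections concrete, here is a small Python sketch of a 9-5-2 network with every connection set to a random value between -1 and +1. It is not Simbrain's code: the sigmoid "squashing" function and the absence of bias terms are assumptions made to keep the example short, and the function names are invented for illustration.

```python
import math
import random

def random_layer(n_in, n_out, rng):
    """One random weight in [-1, 1] for every (input, output) pair."""
    return [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]

def sigmoid(x):
    """Assumed activation function: squashes any total into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-x))

def forward(layer, activations):
    """Each neuron sums its weighted inputs, then squashes the total."""
    return [sigmoid(sum(w * a for w, a in zip(weights, activations)))
            for weights in layer]

rng = random.Random()                 # unseeded: every run gives a different "brain"
hidden_w = random_layer(9, 5, rng)    # 9 inputs -> 5 hidden neurons (45 connections)
output_w = random_layer(5, 2, rng)    # 5 hidden -> 2 outputs (10 connections)

regular_t = [1, 1, 1, 0, 1, 0, 0, 1, 0]
outputs = forward(output_w, forward(hidden_w, regular_t))
print(outputs)   # two values between 0 and 1, meaningless before training
```

Positive weights play the role of the red "get excited" connections and negative weights the blue "calm down" ones. Run it twice and the two outputs change, which is exactly why no two untrained networks in the room behave alike.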

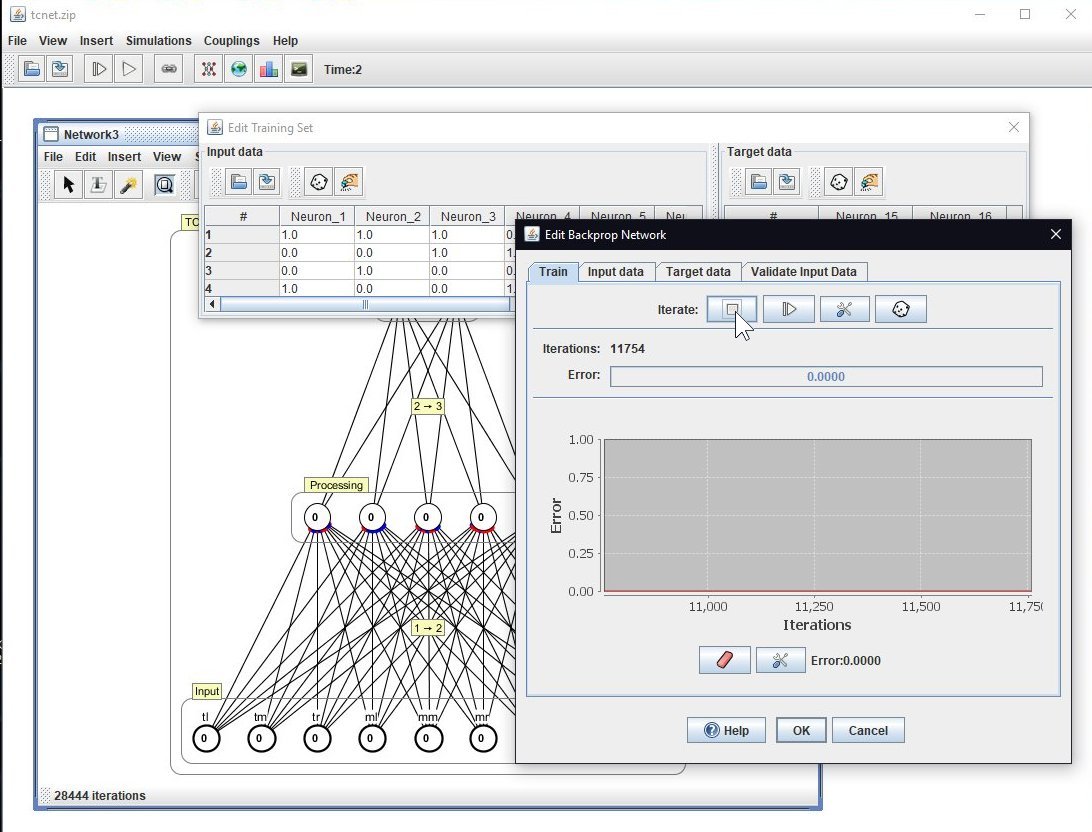

SOME MORE THEORY: Learning is all about gradually changing the connections so that known inputs result in known outputs. When training, the network will, at great speed, pass data through the network, measure how wrong the answer was, pass that error back through the network (changing connections in the process) and repeat this many, many times. It is a LOT like SIMULTANEOUS EQUATIONS in maths. PRESS TRAIN (the PLAY button) until the error is fairly low. NOTE - the error does not need to get to 0 for the network to have learned your patterns.

Your network error should go towards 0. Remember it does not need to reach 0.0000, but if the error does not decrease, you may have a random combination of connections that prevents your network from learning (a stuck network that needs to be RANDOMIZED again before it will learn). Press the STOP button when learning has finished (it was the START button before you pressed it to start learning).
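For the curious, the train-measure-adjust loop can be sketched in plain Python. This is a textbook-style backprop under stated assumptions, not a description of what Simbrain does internally: the sigmoid activation, the extra constant "bias" input, the learning rate of 0.5, the 2000 passes and the target encoding ([1, 0] for "T", [0, 1] for "C") are all choices made for this example.

```python
import math
import random

T = [1, 1, 1, 0, 1, 0, 0, 1, 0]
C = [1, 1, 1, 1, 0, 0, 1, 1, 1]

def rotate(p):
    """Rotate a flat 3x3 pattern 90 degrees clockwise."""
    return [p[3 * (2 - c) + r] for r in range(3) for c in range(3)]

def orientations(p):
    out = [p]
    for _ in range(3):
        out.append(rotate(out[-1]))
    return out

# 8 training rows; assumed targets: [1, 0] means "T", [0, 1] means "C".
DATA = ([(p, [1, 0]) for p in orientations(T)] +
        [(p, [0, 1]) for p in orientations(C)])

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(w_hid, w_out, x):
    xb = x + [1.0]                      # extra constant input acts as a bias
    h = [sigmoid(sum(w * a for w, a in zip(row, xb))) for row in w_hid]
    hb = h + [1.0]
    y = [sigmoid(sum(w * a for w, a in zip(row, hb))) for row in w_out]
    return xb, hb, y

def total_error(w_hid, w_out):
    """Sum of squared errors over the whole training set."""
    return sum((t - o) ** 2
               for x, target in DATA
               for t, o in zip(target, forward(w_hid, w_out, x)[2]))

def train(w_hid, w_out, epochs=2000, rate=0.5):
    for _ in range(epochs):
        for x, target in DATA:
            xb, hb, y = forward(w_hid, w_out, x)
            # How wrong was each output neuron?
            d_out = [(t - o) * o * (1 - o) for t, o in zip(target, y)]
            # Pass that error back through the output weights.
            d_hid = [hb[j] * (1 - hb[j]) *
                     sum(d_out[k] * w_out[k][j] for k in range(len(w_out)))
                     for j in range(len(w_hid))]
            # Nudge every connection in the direction that reduces the error.
            for k, row in enumerate(w_out):
                for j in range(len(row)):
                    row[j] += rate * d_out[k] * hb[j]
            for j, row in enumerate(w_hid):
                for i in range(len(row)):
                    row[i] += rate * d_hid[j] * xb[i]

def classify(w_hid, w_out, x):
    """Report whichever output neuron is more active."""
    y = forward(w_hid, w_out, x)[2]
    return "T" if y[0] > y[1] else "C"

rng = random.Random(1)                  # fixed seed only so the run is repeatable
w_hid = [[rng.uniform(-1, 1) for _ in range(10)] for _ in range(5)]  # 9 inputs + bias
w_out = [[rng.uniform(-1, 1) for _ in range(6)] for _ in range(2)]   # 5 hidden + bias

before = total_error(w_hid, w_out)
train(w_hid, w_out)
after = total_error(w_hid, w_out)
print(f"error before: {before:.4f}, after: {after:.4f}")
print([classify(w_hid, w_out, x) for x, _ in DATA])
```

The error printed after training should be much smaller than the one printed before, but not exactly 0, which mirrors the note above: the network only has to be confident enough that the right output neuron wins.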

SOME things take neural networks a LONG time to learn. Commercial neural networks are typically trained on SUPERCOMPUTERS, and can take WEEKS to learn a series of patterns to an acceptable error level.

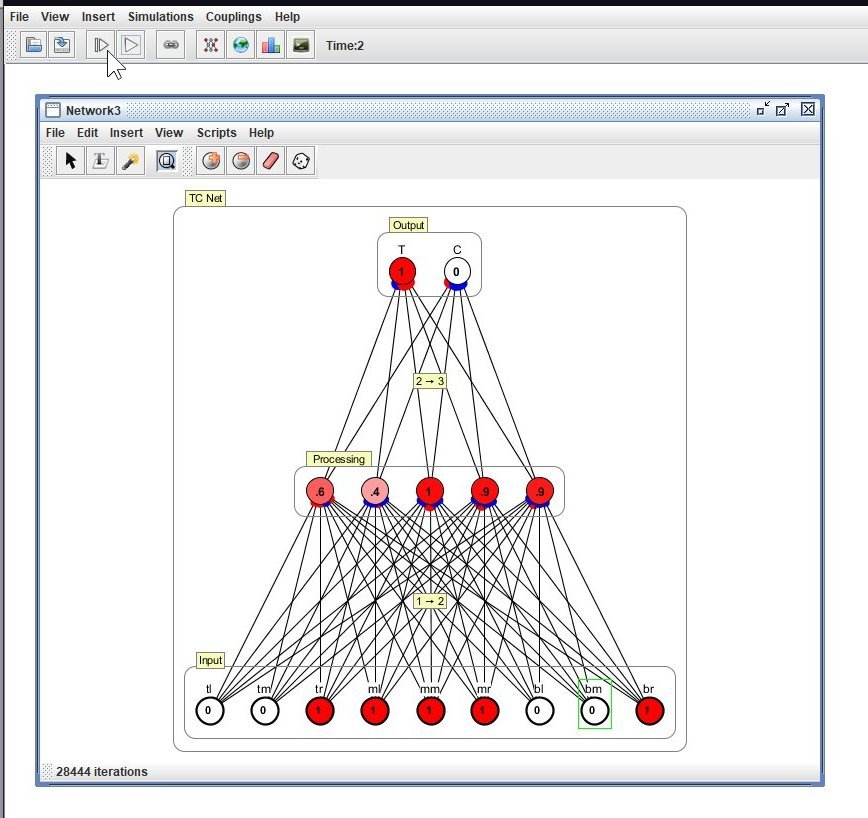

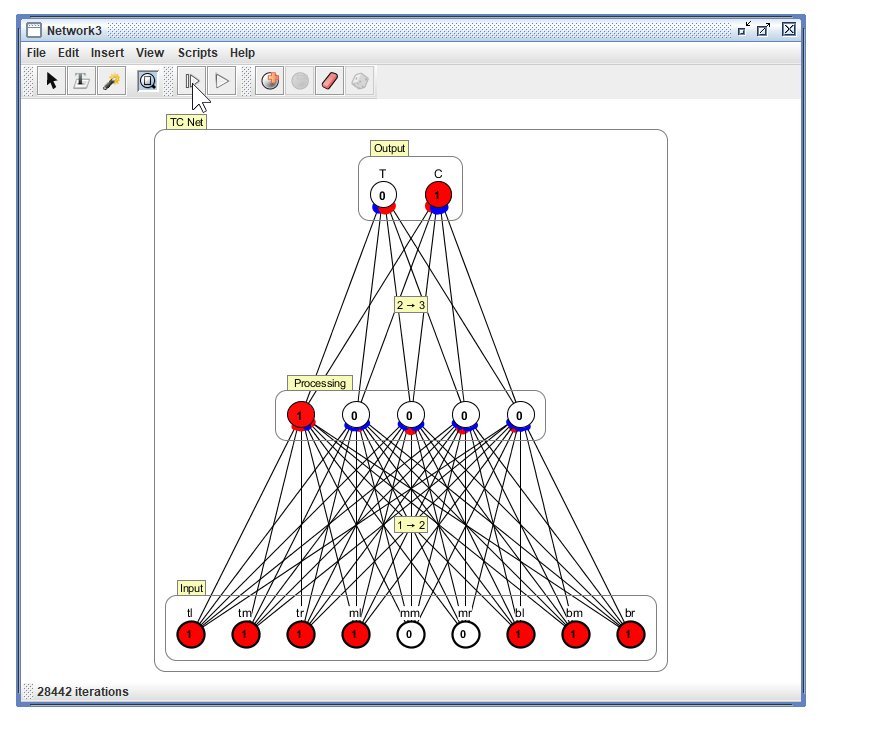

Your network is now ready to use. You now need to set a pattern of 1s and 0s in the INPUT layer (you can SHIFT-CLICK a bunch of neurons, then double-click to open a group dialogue and change the ACTIVATION to either 0 or 1). To see the answer for that input pattern, press the STEP PLAY button on the main window - one or the other OUTPUT neuron should light up, corresponding to the answer.

Below is a "lazy" T - a T on its side, correctly identified:

Below is a regular C, correctly identified:

Remember, SAVE your WORKSPACE to keep your network and data - it will save as a ZIP file containing a bunch of things including the XML file that is your network.

Further Notes:

To use more complex patterns, I have written a SCANNER that lets you free-form draw and DIGITIZE the image, compiling lines of input data to CSV files that make the training set easier to compile.

You can also re-arrange your neurons in the interface to make it simpler to visualise the patterns you are trying to learn - click-dragging individual neurons, or click-dragging a selection rectangle around a group of them, lets you move them around like this: